How Does Content Testing Select a Winner? What is the Bandito Algorithm?

In this guide, we’ll walk through an experiment in Bandito to watch how the test converges on a winner. As discussed in the Content Testing Overview, Bandito implements a Multi-Armed Bandit content test. Bandito decides which variant to serve based on a combination of two scores – one that represents Exploitation and the other that represents Exploration.

In Bandito, the exploitation score is measured through CTR (click-through rate): the number of clicks on a piece of content divided by the number of serves. CTR is calculated for each variant individually. If one variant was served 100 times and clicked 50 times, its CTR is 0.5. If another variant was served 50 times and clicked 40 times, its CTR is 0.8. The second variant has the higher CTR even though it was clicked fewer times, because each variant’s CTR is calculated relative to the number of times that variant was served.
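A minimal Python sketch of the CTR calculation, using the two examples from the paragraph above. The function name `ctr` is hypothetical and not part of any Bandito API; returning 0.0 for a variant with no serves is also an assumption, since the text does not cover that case.

```python
def ctr(clicks: int, serves: int) -> float:
    """Exploitation score: clicks divided by serves for a single variant."""
    if serves == 0:
        # Assumption: a variant that has never been served has no CTR yet; report 0.0.
        return 0.0
    return clicks / serves


# The two variants from the text.
print(ctr(50, 100))  # served 100 times, clicked 50 times
print(ctr(40, 50))   # served 50 times, clicked 40 times
```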

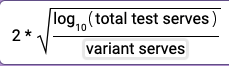

The exploration score is measured through variance. In Bandito, we’ve defined variance as:

$$\text{variance}_i = \frac{\ln(\text{total test serves})}{\text{serves of variant } i}$$

This equation aims to measure how confident the experiment can be in the CTR of a given variant. It does this by comparing the number of times the content has been served with any variant (total test serves, the numerator) to the number of times this particular variant has been served (the denominator). As the experiment continues, both numbers increase, but the numerator grows only logarithmically while the denominator grows by one with every serve of that variant. As a result, a variant’s variance goes down the more it is shown, and over the course of the experiment, variance across all variants goes down.
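The variance term can be sketched in a few lines of Python. This assumes the logarithm is the natural log, since the text does not state a base, and the function name `variance` is hypothetical. The two loops show both effects of the formula: serving a variant more lowers its variance, while a variant that keeps being skipped sees its variance creep up slowly as total serves grow.

```python
import math


def variance(total_serves: int, variant_serves: int) -> float:
    """Exploration score: ln(total test serves) / serves of this variant.

    variant_serves must be at least 1; the formula is undefined for 0 serves.
    """
    return math.log(total_serves) / variant_serves


# Same total serves, more serves of this variant -> lower variance.
for n in (1, 10, 100):
    print(n, round(variance(1000, n), 3))

# Same variant serves, more total serves -> variance rises slowly.
for total in (100, 1000, 10000):
    print(total, round(variance(total, 10), 3))
```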

The variance is considered the measure of exploration. The less we know about the variant, the more time the algorithm will devote to exploring it and learning more about it.

The total score is the sum of the exploitation score (CTR) and the exploration score (variance).

Bandito calculates the total score for every variant each time it needs to decide which variant to serve to a customer, and it always serves the variant with the highest score. If two scores are identical, Bandito picks one of them at random.
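A minimal sketch of the scoring and selection rule, assuming a natural-log variance. The names `total_score` and `choose_variant` are hypothetical, and giving a never-served variant an infinite score is an assumption made so that every variant is tried at least once; it is not documented Bandito behavior.

```python
import math
import random


def total_score(clicks: int, serves: int, total_serves: int) -> float:
    """CTR (exploitation) plus variance (exploration) for one variant."""
    if serves == 0:
        # Assumption: an unserved variant gets an unbounded score so it is served first.
        return math.inf
    return clicks / serves + math.log(total_serves) / serves


def choose_variant(stats: dict[str, tuple[int, int]], rng: random.Random) -> str:
    """Return the variant with the highest total score, breaking ties at random.

    stats maps variant name -> (clicks, serves).
    """
    total = sum(serves for _, serves in stats.values())
    scores = {name: total_score(c, s, total) for name, (c, s) in stats.items()}
    best = max(scores.values())
    leaders = [name for name, score in scores.items() if score == best]
    return rng.choice(leaders)
```

For example, `choose_variant({"A": (1, 2), "B": (0, 1)}, random.Random(0))` returns `"B"`, matching the Step 2 result in the walk-through: A’s higher CTR is outweighed by B’s larger exploration term.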

Algorithm walk-through

Now that we have a high-level sense of the algorithm, let’s walk through a detailed example of it in action.

The numbers below are made up but give an overall sense of how the algorithm works.

Let’s first declare a couple of assumptions:

This test has two Variants, A and B, that vary the headline of a piece of content.

The walk-through begins when each variant has been served exactly once and neither has been clicked.

In Step 1, each variant has been served once and neither has been clicked, so the CTR for each is 0. The variance is also the same for both, because each variant has the same number of serves (1). This results in equal total scores, so A is chosen at random.

| Step | Total Serves | CTR (A) | CTR (B) | Variance (A) | Variance (B) | Score (A) | Score (B) | Result |
|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 0/1 = 0 | 0/1 = 0 | ln(2)/1 = 0.69 | ln(2)/1 = 0.69 | 0.69 | 0.69 | Equal scores. A chosen at random. |
| 2 | 3 | 1/2 = 0.5 | 0/1 = 0 | ln(3)/2 = 0.55 | ln(3)/1 = 1.10 | 1.05 | 1.10 | B is chosen. |
| ... | | | | | | | | |
| 3 | 200 | 45/130 = 0.35 | 6/70 = 0.09 | ln(200)/130 = 0.04 | ln(200)/70 = 0.08 | 0.39 | 0.16 | A is chosen. |

Let’s jump to Step 2. On the next serve, Variant A is served again, and this time it is clicked. With this click, Variant A’s CTR rises to 0.5, well above Variant B’s 0. However, A has now been served twice to B’s once, so B has the higher exploration score, and that is enough to give B the higher total score. Even though Variant A appears to be performing better, Variant B is chosen. This early in the experiment, variance is still large enough to outweigh differences in CTR.

Now let’s jump ahead to Step 3, after a total of 200 serves. As the early results suggested, Variant A continues to perform better, with a CTR of 0.35 to B’s 0.09. Variance has also dropped considerably: it is now low for each variant and keeps falling as each variant accumulates serves. B’s exploration score is no longer large enough to outweigh A’s higher CTR, so A is chosen.
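The table arithmetic can be rechecked with a short script. It recomputes the CTR, Variance, and Score values for Steps 1 to 3 from the click and serve counts, assuming a natural-log variance and a total score equal to CTR plus variance. The helper name `table_row` is hypothetical.

```python
import math

# (step, {variant: (clicks, serves)}) using the counts from the walk-through table.
STEPS = [
    (1, {"A": (0, 1), "B": (0, 1)}),
    (2, {"A": (1, 2), "B": (0, 1)}),
    (3, {"A": (45, 130), "B": (6, 70)}),
]


def table_row(stats: dict[str, tuple[int, int]]) -> dict[str, tuple[float, float, float]]:
    """Return (CTR, variance, score) per variant, each rounded to 2 decimals."""
    total = sum(serves for _, serves in stats.values())
    row = {}
    for name, (clicks, serves) in stats.items():
        rate = clicks / serves
        var = math.log(total) / serves
        row[name] = (round(rate, 2), round(var, 2), round(rate + var, 2))
    return row


for step, stats in STEPS:
    print(step, table_row(stats))
```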

At this point, there are three potential outcomes:

If trends continue, eventually Variant A will be routed nearly all traffic.

If Variant A’s CTR drops for any reason, Variant B could start to see some serves.

A new variant can be introduced.
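To watch the first outcome play out, here is a small simulation sketch. Everything in it is hypothetical: the "true" CTRs of 0.4 for A and 0.1 for B, the deterministic click model (a variant’s k-th serve is clicked whenever floor(k × true CTR) increases, which keeps the run reproducible), the natural-log variance, and serving untried variants first. It illustrates the scoring rule described above, not Bandito’s actual implementation.

```python
import math
import random

# Hypothetical "true" CTRs, used only to decide which serves get clicked.
TRUE_CTR = {"A": 0.4, "B": 0.1}


def score(clicks: int, serves: int, total: int) -> float:
    """CTR + ln(total) / serves; untried variants score +inf (assumption)."""
    if serves == 0:
        return math.inf
    return clicks / serves + math.log(total) / serves


def simulate(n_serves: int, seed: int = 0) -> dict[str, int]:
    """Serve n_serves times and return how many serves each variant received."""
    rng = random.Random(seed)  # used only to break ties between equal scores
    clicks = {v: 0 for v in TRUE_CTR}
    serves = {v: 0 for v in TRUE_CTR}
    for total in range(n_serves):  # total = serves made so far
        scores = {v: score(clicks[v], serves[v], total) for v in TRUE_CTR}
        best = max(scores.values())
        chosen = rng.choice([v for v, s in scores.items() if s == best])
        serves[chosen] += 1
        # Deterministic click model: the k-th serve is clicked when floor(k * p) increases.
        k, p = serves[chosen], TRUE_CTR[chosen]
        if math.floor(k * p) > math.floor((k - 1) * p):
            clicks[chosen] += 1
    return serves


print(simulate(1000))
```

Under these assumptions, A ends up with the large majority of the 1,000 serves, while B keeps receiving only the occasional serve as its exploration term slowly grows.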

Introducing a new variant

One of the benefits of Bandito over a traditional A/B test is the ability to introduce a new variant at any point. Let’s see what happens if we continue the experiment above by introducing a new variant, C.

| Step | Total Serves | CTR + Variance (A) | CTR + Variance (B) | CTR + Variance (C) | Score (A) | Score (B) | Score (C) | Result |
|---|---|---|---|---|---|---|---|---|
| 4 | 201 | 45/131 + ln(201)/131 | 6/70 + ln(201)/70 | 0/0 + ln(201)/0 | 0.38 | 0.16 | undefined (no serves yet) | C is chosen |
| 5 | 202 | 45/131 + ln(202)/131 | 6/70 + ln(202)/70 | 0/1 + ln(202)/1 | 0.38 | 0.16 | 5.31 | C is chosen |
| ... | | | | | | | | |
| 6 | 500 | 90/200 + ln(500)/200 | 6/70 + ln(500)/70 | 120/230 + ln(500)/230 | 0.48 | 0.17 | 0.55 | C is chosen |

In this walk-through, we see what happens when you suddenly add a variant about which nothing is known. With such a high variance (low confidence) for the new variant, the experiment begins to serve variant C at a high rate. In this case, that works out well: by the time there have been 500 serves, C is doing even better than Variant A previously was (a CTR of about 0.52, versus 0.34 for A at Step 4).
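A sketch of why the new variant is served immediately, using the counts from Steps 4 to 6. It assumes a natural-log variance, treats a never-served variant’s score as +infinity (one way to read the "undefined" score), and assumes C’s first serve was not clicked. The helpers `score` and `pick` are hypothetical.

```python
import math


def score(clicks: int, serves: int, total: int) -> float:
    """CTR + ln(total serves) / serves; untried variants score +inf (assumption)."""
    if serves == 0:
        return math.inf
    return clicks / serves + math.log(total) / serves


def pick(stats: dict[str, tuple[int, int]]) -> str:
    """Highest-scoring variant (tie-breaking omitted for brevity)."""
    total = sum(s for _, s in stats.values())
    return max(stats, key=lambda v: score(*stats[v], total))


step4 = {"A": (45, 131), "B": (6, 70), "C": (0, 0)}
step5 = {"A": (45, 131), "B": (6, 70), "C": (0, 1)}
step6 = {"A": (90, 200), "B": (6, 70), "C": (120, 230)}
for stats in (step4, step5, step6):
    print(pick(stats))
```

At Step 5, C’s single serve leaves it with an exploration term of ln(202) ≈ 5.31, far above every other score, so it keeps being chosen until its own serve count catches up.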

Selecting a winner

Bandito declares the winner of an experiment when a single variant has had a significantly higher CTR for a period of five minutes.

Every 20 seconds, Bandito calculates the CTR of each variant in a test. It then runs a statistical significance test to determine whether the variant with the highest CTR is merely ahead or is statistically significantly better than the others. While a test is running, and especially in its early stages, variants will likely trade the lead, each edging out the others slightly. The significance test ensures that a difference in CTR between variants is meaningful and not just noise in the data.
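The text does not say which significance test Bandito uses. As a hypothetical illustration, this sketch uses a one-sided two-proportion z-test at alpha = 0.05 and only reports a leader if it beats every other served variant. The function names and the alpha value are assumptions.

```python
import math
from statistics import NormalDist
from typing import Optional


def one_sided_p_value(c1: int, n1: int, c2: int, n2: int) -> float:
    """Two-proportion z-test p-value for 'variant 1 has a higher CTR than variant 2'."""
    pooled = (c1 + c2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0  # no clicks, or only clicks, everywhere: no evidence of a difference
    z = (c1 / n1 - c2 / n2) / se
    return 1 - NormalDist().cdf(z)


def significant_leader(stats: dict[str, tuple[int, int]], alpha: float = 0.05) -> Optional[str]:
    """Return the highest-CTR variant if it significantly beats every other variant."""
    served = {v: cs for v, cs in stats.items() if cs[1] > 0}
    if len(served) < 2:
        return None
    leader = max(served, key=lambda v: served[v][0] / served[v][1])
    c1, n1 = served[leader]
    for v, (c2, n2) in served.items():
        if v != leader and one_sided_p_value(c1, n1, c2, n2) >= alpha:
            return None  # the lead over this variant could still be noise
    return leader
```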

Once a variant is significantly better, Bandito declares the experiment as converging. This means that, given no changes, Bandito expects the test to settle on a winner. A winner is declared after the same variant has been significantly outperforming the others for a period of 5 minutes.

If at any point during the convergence period another variant begins to perform better than the converging variant, Bandito stops the convergence and waits for a new convergence period to start. This often happens when a new variant is added mid-experiment.
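The convergence rules can be sketched as a small state machine. It assumes each 20-second check yields either a significantly leading variant or nothing; the class and method names are hypothetical, while the 20-second interval and 5-minute window come from the text. Restarting whenever the leader changes or loses significance is one reading of how Bandito stops convergence and starts over.

```python
from typing import Optional

CHECK_INTERVAL_S = 20       # from the text: CTRs are checked every 20 seconds
CONVERGENCE_WINDOW_S = 300  # from the text: 5 minutes of sustained significance


class ConvergenceTracker:
    """Hypothetical tracker that declares a winner after a sustained significant lead."""

    def __init__(self) -> None:
        self.candidate: Optional[str] = None  # variant currently converging
        self.since: Optional[float] = None    # time its significant lead began
        self.winner: Optional[str] = None

    def update(self, now: float, leader: Optional[str]) -> Optional[str]:
        """Feed one check result; return the winner once it has been declared."""
        if self.winner is not None:
            return self.winner
        if leader is None or leader != self.candidate:
            # No significant leader, or a different one: restart convergence.
            self.candidate = leader
            self.since = now if leader is not None else None
            return None
        if now - self.since >= CONVERGENCE_WINDOW_S:
            self.winner = leader
        return self.winner


tracker = ConvergenceTracker()
result = None
for t in range(0, 301, CHECK_INTERVAL_S):
    result = tracker.update(t, "A")
print(result)
```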